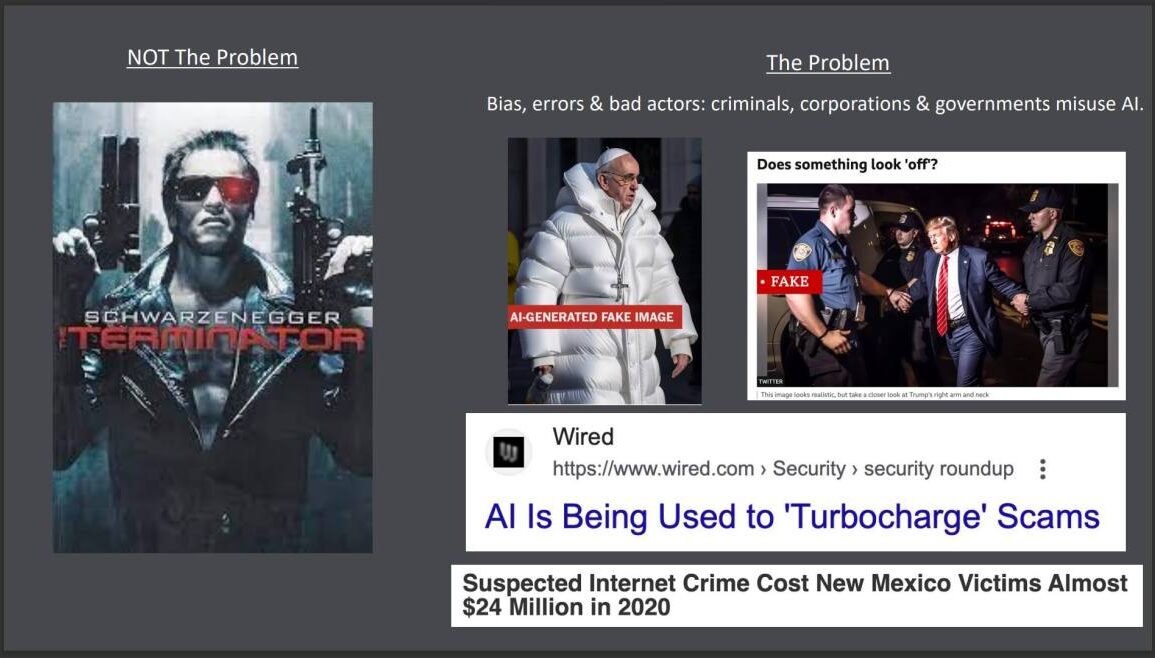

Separating fact from fiction has become harder in the 21st century in part due to the proliferation of artificial intelligence and its current uses.

Some people use AI to scam or trick their victims into seeing something that is not real but looks real enough that it is not immediately questioned.

Recently, AI has been a topic of discussion at the state level, in the U.S. Senate and at the White House.

U.S. Sen. Martin Heinrich, D-New Mexico, and other senators introduced the Creating Resources for Every American to Experiment with Artificial Intelligence Act of 2023, known as the CREATE AI Act.

“We know that AI will be enormously consequential. If we develop and deploy this technology responsibly, it can help us augment our human creativity and make major scientific advances, while also preparing American workers for the jobs of the future. If we don’t, it could threaten our national security, intellectual property, and civil rights,” Heinrich said in a press release. “The bipartisan CREATE AI Act will help us weigh these challenges and unleash American innovation by making the tools to conduct important research on this cutting-edge technology available to the best and brightest minds in our country.”

Heinrich said it would “help us prepare for the future AI workforce” for various industries across the United States.

The act would establish the National Artificial Intelligence Research Resource (NAIRR), a shared national research infrastructure that would give AI researchers and students greater access to the complex resources, data and tools needed to develop safe and trustworthy artificial intelligence.

Heinrich founded and serves as co-chairman of the Senate Artificial Intelligence Caucus.

On July 21, President Biden announced that seven leading AI companies he had met with had made voluntary commitments to help make AI technology safer, more secure and more transparent.

These companies were Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI.

“These commitments are real, and they’re concrete,” Biden said in remarks at the White House announcing the commitments. “They’re going to help the industry fulfill its fundamental obligation to Americans to develop safe, secure, and trustworthy technologies that benefit society and uphold our values and our shared values…We’ll see more technology change in the next 10 years, or even in the next few years, than we’ve seen in the last 50 years. That has been an astounding revelation to me, quite frankly. Artificial intelligence is going to transform the lives of people around the world.”

In October 2022, the White House released a Blueprint for an AI Bill of Rights. The Office of Management and Budget has also issued a memorandum with guidance for agencies on regulating AI applications.

“When considering regulations or policies related to AI applications, agencies should continue to promote advancements in technology and innovation, while protecting American technology, economic and national security, privacy, civil liberties, and other American values, including the principles of freedom, human rights, the rule of law, and respect for intellectual property,” the memo stated.

State-level discussions

At its meeting last week, New Mexico’s interim Legislative Science, Technology and Telecommunications Committee also discussed AI and the issues it raises.

The committee focused on a type of AI known as generative AI, which uses algorithms to create new content such as text and images. ChatGPT and DALL-E are examples.

“The real concern with generative AI is that it already is very good at producing convincing stories that sound true, that sounds convincing, but are in fact, just based on falsehoods, either intentionally or not intentionally,” said Lydia Tapia, Ph.D., a professor in the University of New Mexico Computer Science Department. “There’s a good bit of research that suggests that humans like certain kinds of information, certain kinds of stories that are not too complicated, but not too simple; and often truth is way more complicated than people would like to hear. So building fiction that people will believe is actually easier than building true stories.”

The discussion included the remote possibility of AI becoming sentient, as depicted in science fiction like the movie “Terminator,” in which an AI defense network becomes self-aware and brings on a nuclear war to destroy humanity.

“I think that’s sort of the history of technology that the really big consequential questions, right, can we prevent the Terminator from killing us all, we’ll probably be able to do that if it’s ever a possibility,” UNM Computer Science Department professor Melanie Moses, Ph.D., said at the meeting. “It’s really the smaller insidious pieces of this that, I think, we need to pay attention to, where incentives and regulations really can help us to navigate the use of this new technology that we haven’t had much experience with until this point.”

Moses compared the proliferation of AI to the technological advances of 80 years ago that led to the development of the atomic bomb and all of its aftereffects.

“There is also the concern of the ripple effects, right, when this technology was suddenly released into the world, it dramatically changed the course of history,” Moses said. “We have very fortunately, regulated at least the use of nuclear bombs, right, and probably ways that people thought we would never manage to do at least thus far. The side consequences, right, the peripheral consequences, things like uranium mining and, you know, destruction of native lands and the health of populations. All of those things are a little harder to deal with.”

The committee also heard from Joshua Garland, Ph.D., interim director of the Center on Narrative, Disinformation and Strategic Influence at Arizona State University and an associate research professor there, about his suggestions for curbing disinformation.

Garland spoke about how disinformation in today’s media environment erodes trust, and about its effects on the electorate.

“Disinformation erodes trust in socio-political institutions,” Garland said. “That’s the fundamental fabric of our democracy: Legitimate news sources, scientists, experts and fellow citizens have to be able to be trusted, so that I can come to you about things I don’t know and get real, truthful information so that I can form an educated opinion… so that I can vote on what I believe the country’s direction should be. Uploaded information environment results in the loss of a shared reality, and an Information Island. This is what’s occurred in our country. So we’ve had enough disinformation in our country, we now have a loss of a shared reality.”

Garland made several recommendations to the committee on how to combat misinformation and disinformation created using AI.

He recommended creating digital media literacy programs that would allow media and data consumers to “access, analyze, evaluate, create and act using all forms of communication”; incorporating digital media literacy into K-12 school programs; making digital media literacy programs freely available to all New Mexicans; and regulating the use of AI in political campaigns and other political communications.

AI also affects other industries, including entertainment.

The Writers Guild of America, which represents TV and movie writers, went on strike May 2 and remains on strike. The Screen Actors Guild-American Federation of Television and Radio Artists, which represents TV and movie actors, joined them on July 14. It is the first time since 1960 that both unions have been on strike simultaneously.

Although the two strikes are largely about residuals from streaming services, one growing concern is the use of AI in film and television.

Santa Fe resident George R.R. Martin, author of the “A Song of Ice and Fire” book series, which was adapted into the HBO series “Game of Thrones,” spoke to the committee.

“Technology keeps going and I’ve experienced every part of it and AI is something people, particularly in my community,… (have) seen coming for a long, long time and there were two, kind of, related issues that we could touch (on) here today,” he said. “One is what AI is going to do tomorrow. Both SAG and (WGA) are on strike now and one of the things we need to settle is that the producers and AMPTP are digging in their heels and refusing to even discuss is what regulations will run AI (and) how our careers and Hollywood are going to continue in the future.”

He said the discussion needs to cover not just the use of AI in the near future, but in the far future as well.

AI and algorithms also affect the criminal justice system.

The concerns there involve methods and algorithms that are already in use across the country, said Santa Fe Institute professor Cristopher Moore.

He is also a member of the Interdisciplinary Working Group on Algorithmic Justice.

“My feeling about the use of these algorithms is that they’re neither good nor evil,” Moore said. “I think in some cases, they can help, in fact, but I want them to be transparent. I want people to know what information about them is being used and how it’s being used. I want that not just for the applicants, but also for the human decision makers that are being advised by these things and also for policymakers like you who are trying to decide whether these things should be deployed and used, especially in the public sector.”

In New Mexico, judges use an algorithm called the Public Safety Assessment developed by the Arnold Foundation. It is commonly called the Arnold tool.

“Interestingly enough, the designers of the PSA, the folks at the Arnold Foundation, do not want us to use it to make the decision to detain or release and there was a lot of discussion about that in this last legislative session,” Moore said. “So the way it’s phrased now is, ‘Hey, judge, use the constitutional process to make that decision, detain versus release. If you choose to release, then we’re advising you on, maybe, what level of supervision you should place this (defendant) under’.”

The PSA is available for free from the Arnold Foundation.

Moore would like the legislature to “require transparency and auditability for any algorithm that state or local governments use to make or inform life-altering decisions.”

So far, the only AI-related legislation enacted in New Mexico was a provision in a supplemental appropriations bill passed during the 2023 legislative session, which added $290,000 for AI equipment at the ASK Academy charter school in Rio Rancho.

The committee met to discuss current issues with AI as a prelude to possible legislative proposals for the upcoming 2024 session.
